Sequence to Sequence Learning with Neural Networks

Keywords: LSTM, Seq2Seq

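Research topic

Applies deep neural networks (DNNs) to sequence-to-sequence learning.

Uses LSTMs and reverses the order of the input sentence to improve performance.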

✨Innovations:

- Replaces the RNN unit in seq2seq with an LSTM, which may speed up training on long sentences;

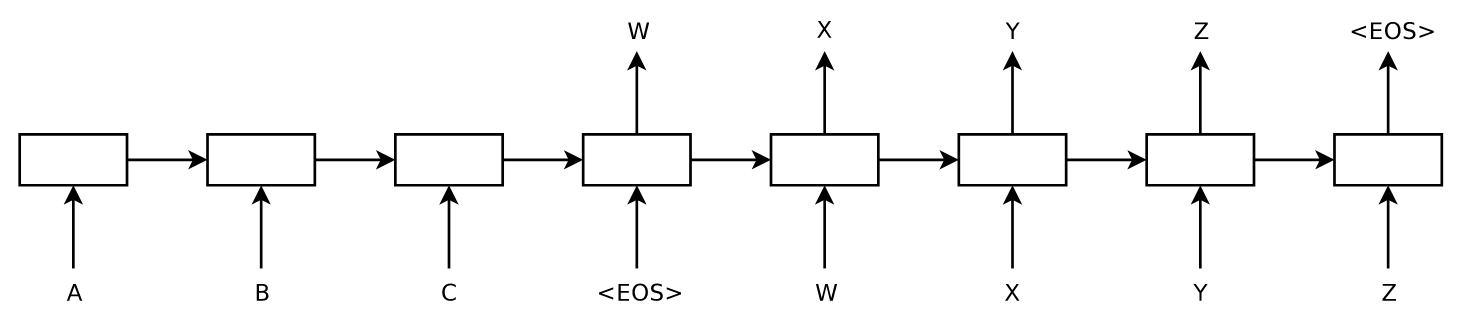

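- Uses two LSTMs: one as the encoder and one as the decoder;

- Builds the model from 4-layer LSTMs;

- Feeds the input sentence in reverse order, which improves the LSTM's performance.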
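The input reversal in the last bullet can be sketched as a small data-preparation step. This is only an illustration and not the paper's code: the helper name `make_training_pair` and the `<EOS>` token string are assumptions made here. Only the source sentence is reversed; the target keeps its order.

```python
def make_training_pair(source_tokens, target_tokens, eos="<EOS>"):
    """Build one (encoder input, decoder target) pair.

    Hypothetical helper for illustration: the source sentence is reversed,
    the target sentence keeps its original order and gets an end marker.
    """
    encoder_input = list(reversed(source_tokens))  # c, b, a instead of a, b, c
    decoder_target = list(target_tokens) + [eos]   # target order is unchanged
    return encoder_input, decoder_target


src, tgt = make_training_pair(["a", "b", "c"], ["x", "y", "z"])
print(src)  # ['c', 'b', 'a']
print(tgt)  # ['x', 'y', 'z', '<EOS>']
```

After this step the first source word "a" is the last token the encoder reads, so it sits right next to the first target word "x".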

Discussion & explanation

LSTM unit

Related blog post: https://zhuanlan.zhihu.com/p/32085405

Why reversing the sentence improves performance

“However, the first few words in the source language are now very close to the first few words in the target language, so the problem’s minimal time lag is greatly reduced.” (Sutskever et al., 2014, p. 4)

“Thus, backpropagation has an easier time “establishing communication” between the source sentence and the target sentence, which in turn results in substantially improved overall performance.” (Sutskever et al., 2014, p. 4)
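The "minimal time lag" argument can be checked with a toy calculation. The sketch below is an assumption-laden illustration, not something taken from the paper: it assumes the source and target have the same length `n`, that word `i` of the source corresponds to word `i` of the target, and that the model reads the source followed directly by the target. The function name `time_lags` is hypothetical.

```python
def time_lags(n, reverse_source):
    """Distance (in time steps) between each source word and its target word.

    Toy assumptions: equal lengths, word i aligns with word i, and the
    target sequence starts right after the source sequence ends.
    """
    lags = []
    for i in range(n):
        src_pos = (n - 1 - i) if reverse_source else i  # position while encoding
        tgt_pos = n + i                                 # position while decoding
        lags.append(tgt_pos - src_pos)
    return lags


plain = time_lags(4, reverse_source=False)
flipped = time_lags(4, reverse_source=True)
print(plain, min(plain))      # [4, 4, 4, 4] 4
print(flipped, min(flipped))  # [1, 3, 5, 7] 1
```

Under these assumptions the smallest lag drops from `n` to 1 once the source is reversed, while the sum of the lags stays the same (16 in both cases here). The first words become close neighbours, which is the "minimal time lag" the quote refers to.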

Design & implementation

Model structure

Not yet implemented, so not discussed here.
